Filipino text classification by Universal Language Model Fine-tuning (ULMFiT)

Abstract

A major obstacle in natural language processing is the scarcity of labeled data for many languages. Transfer learning techniques such as Universal Language Model Fine-tuning (ULMFiT) have emerged as effective ways to address this problem. In this paper, we apply ULMFiT to text classification in the Filipino language. We follow the three stages of the ULMFiT approach: pretraining a language model, fine-tuning it on the target text, and training a text classifier on top of it. We independently reproduce previous results for a binary text classification task on a Filipino-language dataset. We also show that ULMFiT performs well on a multi-label classification task, achieving Hamming losses as low as ~0.10, comparable to previous benchmark results obtained with transformer models.
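For readers unfamiliar with the metric, the Hamming loss reported for the multi-label task is the fraction of individual label assignments that are predicted incorrectly, so lower is better and 0 means every label of every sample is correct. The sketch below is a minimal illustration in plain Python, not the evaluation code used in the paper; the function name `hamming_loss` and the toy label matrices are assumptions made for this example only.

```python
from typing import Sequence


def hamming_loss(y_true: Sequence[Sequence[int]],
                 y_pred: Sequence[Sequence[int]]) -> float:
    """Fraction of (sample, label) slots where prediction and truth differ."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same number of samples")

    total = 0  # number of (sample, label) slots compared
    wrong = 0  # number of slots where the prediction is incorrect
    for true_row, pred_row in zip(y_true, y_pred):
        if len(true_row) != len(pred_row):
            raise ValueError("each sample must have the same number of labels")
        for t, p in zip(true_row, pred_row):
            total += 1
            if t != p:
                wrong += 1

    if total == 0:
        raise ValueError("cannot compute Hamming loss on empty input")
    return wrong / total


# Hypothetical toy data: 5 samples, 4 binary labels each (not from the paper).
y_true = [
    [1, 0, 0, 1],
    [0, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
y_pred = [
    [1, 0, 0, 0],  # misses the fourth label
    [0, 1, 0, 0],
    [1, 0, 0, 0],  # misses the second label
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]

loss = hamming_loss(y_true, y_pred)
print(f"Hamming loss: {loss:.2f}")
```

In this toy case, 2 of the 20 label slots are wrong, which gives a Hamming loss of 0.10. Unlike exact-match accuracy, the metric gives partial credit to a sample whose label set is only partly correct, which is why it is commonly used for multi-label problems.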

Issue

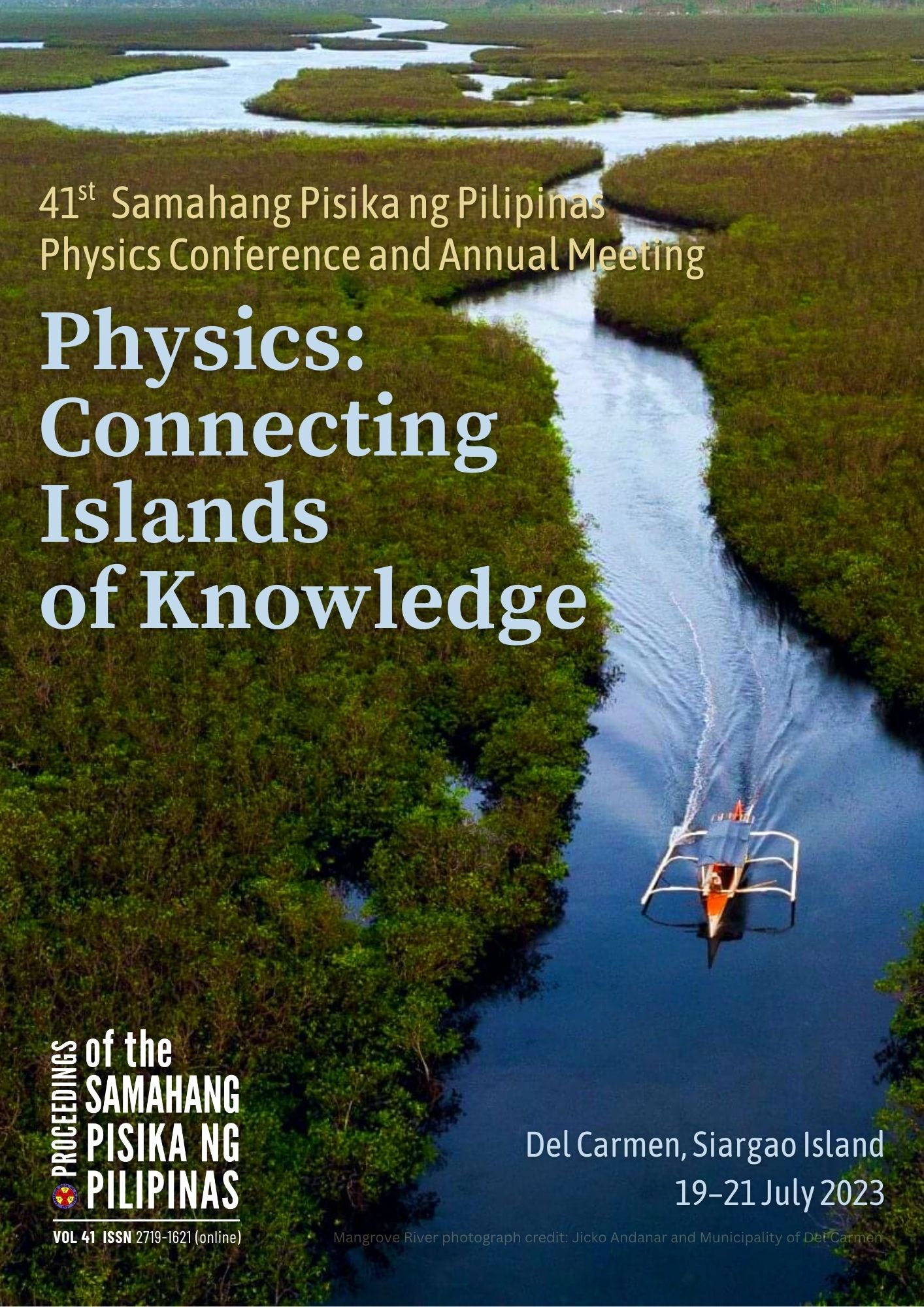

Physics: Connecting islands of knowledge

19-21 July 2023, Del Carmen, Siargao Island

Please visit the SPP2023 activity webpage for more information on this year's Physics Congress.