Einstein: A proposed activation function for convolutional neural network (CNN) in image classification and its comparison to ReLU, Leaky ReLU, and Tanh

Abstract

Activation functions (AFs) are integral to how convolutional neural networks (CNNs) perform image classification and recognition. Here, we propose a new AF called "Einstein": a novel, piecewise, dynamic AF defined by a parameter r. Einstein is inspired by our earlier work on analogies with Einstein's theory of special relativity, whose equation "morphs" into its present form when applied to AFs. Our goal is to optimize Einstein by measuring its classification accuracy in a CNN for different values of r. Einstein outperforms well-established AFs: (i) Tanh and (ii) Leaky ReLU for r from 0.80 to 1.00, and (iii) ReLU for r = 0.93, 0.96, and 1.00.
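The abstract does not state Einstein's defining equation, so it is not reproduced here. For reference, the three baseline AFs it is compared against have standard definitions, sketched below in plain Python. The Leaky ReLU negative slope `alpha = 0.01` is an assumed common default; the abstract does not say which slope the comparison used.

```python
import math


def relu(x: float) -> float:
    """ReLU: passes positive inputs unchanged and maps negative inputs to 0."""
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: like ReLU, but negative inputs keep a small slope alpha.

    alpha = 0.01 is an assumed default, not a value taken from the paper.
    """
    return x if x > 0 else alpha * x


def tanh(x: float) -> float:
    """Tanh: smooth, odd, and bounded to the open interval (-1, 1)."""
    return math.tanh(x)


# The baselines named in the abstract, keyed by display name.
BASELINES = {"ReLU": relu, "Leaky ReLU": leaky_relu, "Tanh": tanh}

if __name__ == "__main__":
    # Evaluate each baseline at a negative, zero, and positive input.
    for name, f in BASELINES.items():
        print(name, [round(f(x), 4) for x in (-2.0, 0.0, 2.0)])
```

The sample inputs show the behavior that distinguishes the baselines. ReLU discards negative inputs entirely, Leaky ReLU scales them by a small slope, and Tanh saturates toward -1 and 1.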

Downloads

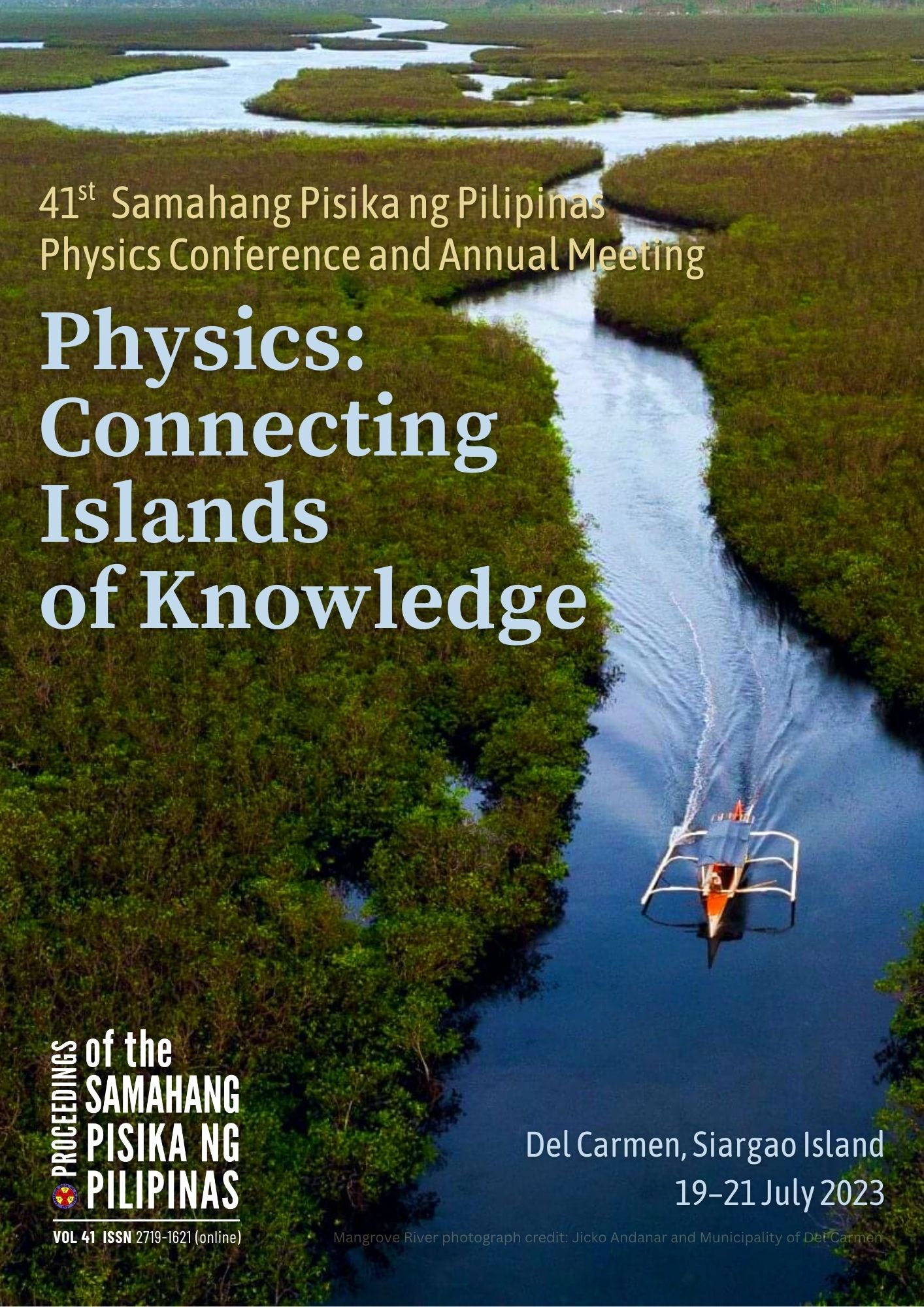

Issue

SPP2023 Physics Congress: "Physics: Connecting islands of knowledge"

19-21 July 2023, Del Carmen, Siargao Island